Each month, around 60% of Americans talk to customer service. It’s a $350B industry that keeps growing – along with customer dissatisfaction.

Enter generative AI. As its capabilities increase, companies have an opportunity to use it to improve customer service experiences. There are lots of advantages: a customer service chatbot or virtual agent can be available 24/7, field many inquiries at a time, and respond faster than a person, deflecting simple requests and freeing up your human agents to add value to your business in other ways.

However, the specter of old, pre-AI chatbots is a major barrier. In a 2022 survey, 72% of respondents said that customer service chatbots were a waste of time, 80% said they were more frustrated after the interaction, and 78% said they still had to talk to a person to resolve their issue at the end.

This is an artifact of the ‘state-based’ way chatbots were built before AI. For product managers, these models are inherently problematic: they’re out of date almost as soon as they’re live, and the ‘if/then’ flows they’re coded for lengthen the time to value. As a result, both businesses and consumers hate them.

With a legacy like that, users may need coaxing, or even retraining, to start using and valuing the next generation of customer service chatbots. The good news: behavioral science can help. By using the strategies we share in this article, you can not only increase adoption of your AI-powered customer service chatbot and reduce escalations to live agents, but also create winning experiences that radically enhance customer satisfaction. Here’s how.

Use Anchors and Comparisons

As the joke goes: the way to outrun a bear is just to be faster than the person running behind you. Your customer service bot or virtual agent doesn’t have to be perfect. It just has to be better than the alternative and better than the chatbots of old.

The Behavioral Science Insight

Value is not inherent. People compare an option to other offerings to decide how much better or worse it is, and they make decisions based on context. As humans, it’s basically impossible for us to make judgments in a vacuum. We’re more likely to vote for the first name we see, offerings look like a better deal when they seem exclusive, and scents are sweeter if they come after a bad smell.

You may have heard the happiness advice to spend your money on experiences rather than material goods – it turns out that even this advice may work because experiences are harder to compare, so they don’t end up being compared unfavorably to something else.

If you can’t stop people from making comparisons (and you can’t, we promise), then it becomes very important to understand what comparisons people are making and where their reference points are set.

How to Do It

Here are two ways you can leverage the principle of relativity. We recommend using them in tandem:

1) Play up the positive contrast between your AI-enabled chatbot and previous state-based chatbots. Leverage novelty cues and explicitly address people’s negative expectations from the past. Use obvious words like ‘new’ that capture customers’ attention and interest.

However, in some cases, ‘new’ may not be enough. To disrupt old associations, you may need a whole name change and fresh start: RIP ‘New Bing’, which relaunched as Copilot to overcome perceived negative customer associations with ‘old’ Bing.

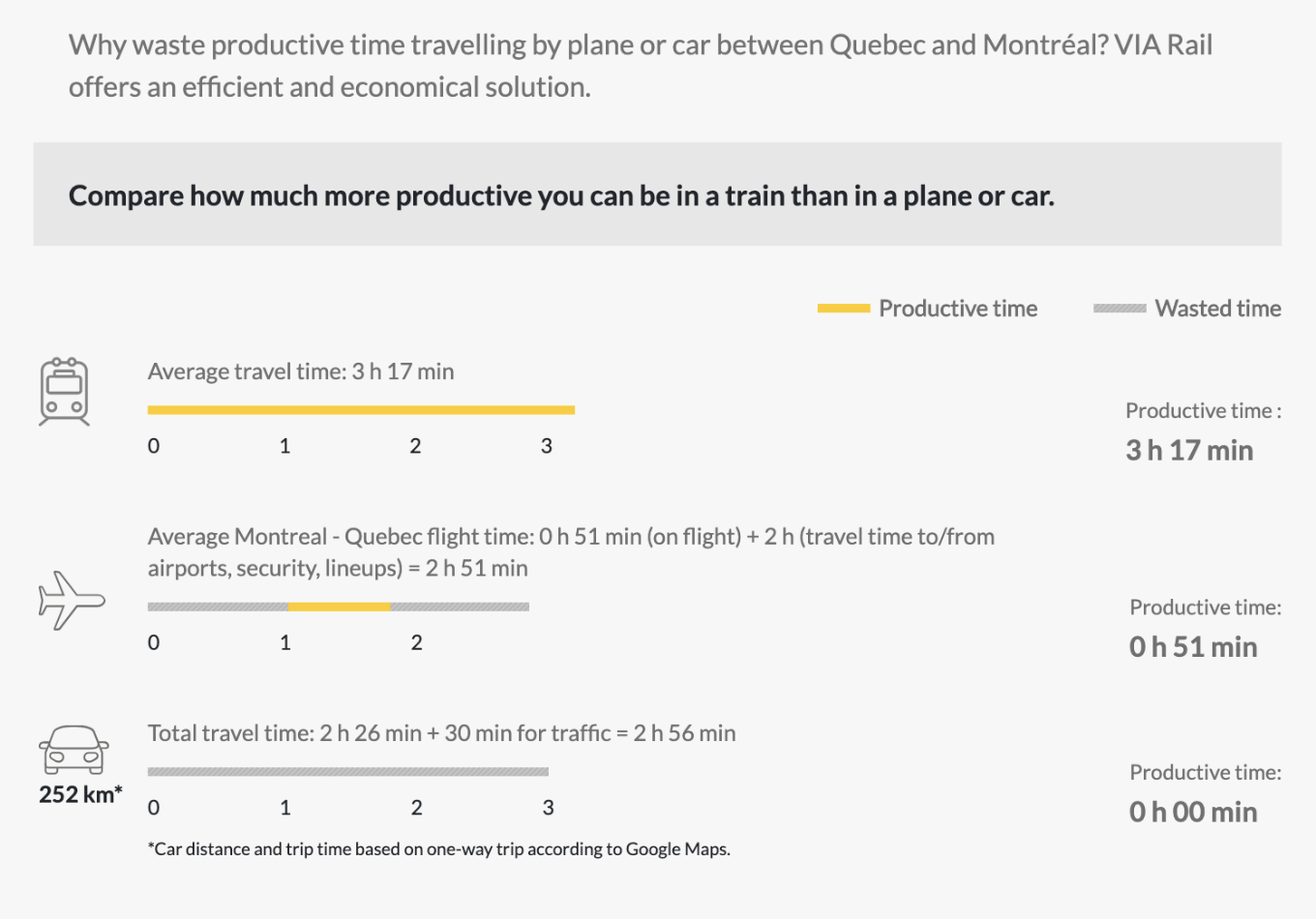

2) Play up the positive contrast between your AI-enabled chatbot and the alternatives. These could be your website, support email, or a human agent. Guide people to a reference point that highlights your virtual agent’s superiority, while also being honest. If your AI virtual agent can solve a higher percentage of support cases, this will be music to your customers’ ears – so tell them.

Use your knowledge of what people call about and what can be effectively contained with virtual chatbots. Offer people these comparisons when they are deciding how to contact customer service: average time to reply via phone call, email, or chat. As virtual agents become even more stable and reliable, eventually you will be able to point out those benefits too, but for now, users will probably respond best to their time-saving ability.

Find out where your virtual agent shines, call out that favorable comparison, and don’t be surprised when more customers start using it.
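To make the channel comparison concrete, here is a minimal sketch of how a contact page might rank support channels by average reply time. The function names, channel labels, and wait times are all hypothetical placeholders; you would substitute your own measured averages.

```python
from typing import Dict, List


def format_wait(minutes: int) -> str:
    """Turn a wait time in minutes into a short, readable label."""
    if minutes < 60:
        return f"about {minutes} min"
    return f"about {round(minutes / 60)} hr"


def contact_options(avg_reply_minutes: Dict[str, int]) -> List[str]:
    """List channels fastest-first so the quickest option anchors the comparison."""
    ranked = sorted(avg_reply_minutes.items(), key=lambda pair: pair[1])
    return [f"{channel}: {format_wait(minutes)}" for channel, minutes in ranked]


# Illustrative numbers only, not real benchmarks.
options = contact_options(
    {"Email": 1440, "Phone": 25, "Chat with virtual agent": 2}
)
for line in options:
    print(line)
```

Listing the fastest channel first sets the reference point for everything below it. This only works honestly if the numbers are real, so it is worth showing the comparison only for request types where the virtual agent actually is faster.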

Leverage Social Norms for Adoption

How do rideshare drivers decide where to go? The app suggests destinations to them. But when drivers know that their peers are ignoring those suggestions, they’re much more likely to do the same. Social proof cuts both ways, though: leveraging social pressure is also a great way to get people to try a new tech trend.

The Behavioral Science Insight

Humans are social creatures and want to fit in. You can nudge people along the adoption curve by making it more salient that generative AI is (or is becoming) the norm, in ways that range from obvious to subtle:

- Descriptive norms: Tell people what others do. ‘9,368 other people have protected their trip with trip insurance.’

- Prescriptive norms: Tell people what others value. Sometimes this is communicated via a motto, but it can be numeric. ‘10 out of 10 dentists recommend flossing.’

- Dynamic norms: If absolute values are low, try telling people about the rate of change. ‘We’ve doubled our donation rate in the last 24 hours!’

- Implicit norms: These norms are communicated through observation alone. People can observe behaviors like littering, spending habits, or car use; unfortunately for chatbots, private or online behaviors can be difficult to shift without explicit norm communication.

How to Do It

You can always tell people how many other users have resolved their issue using a virtual agent – in this case, we would probably recommend proportions rather than absolute numbers to avoid also implying that people are banging down your doors with customer service complaints. For example, say ‘97% of return requests are successfully handled by our chatbot’ to redirect users.
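As a rough sketch of the proportion-based message described above, the snippet below turns resolution counts into a percentage statement and stays silent when the share is too low to be persuasive. The function name, the `min_share` threshold, and the counts are assumptions made for illustration.

```python
from typing import Optional


def social_proof_message(
    resolved_by_bot: int,
    total_requests: int,
    topic: str,
    min_share: float = 0.8,  # hypothetical cutoff; tune to your own data
) -> Optional[str]:
    """Return a descriptive-norm message as a proportion, or None if it would not help."""
    if total_requests <= 0:
        return None
    share = resolved_by_bot / total_requests
    if share < min_share:
        # A weak number would signal that most people do NOT succeed with the bot.
        return None
    return f"{share:.0%} of {topic} are successfully handled by our chatbot."


print(social_proof_message(970, 1000, "return requests"))
# prints: 97% of return requests are successfully handled by our chatbot.
```

Reporting only the percentage keeps the raw request volume out of view, which avoids implying that customers are flooding you with complaints.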

One example of using a trusted messenger to deliver social proof is how ChatGPT let users share prompts and responses. When a friend or colleague shares a prompt with you, it’s social proof that anyone can use ChatGPT. As a bonus, that proof carries more weight when the person sharing is someone you trust.

In fact, not only did ChatGPT prompt sharing create social proof via referral, it also showed people how to use the product. This was clever – and leads right into our next section, a deep dive on how to teach people to use a virtual agent well.

Build a Mental Model By Limiting Scope

The use cases for a customer service bot can feel endless. Your bot could track orders, reset passwords, cancel incorrect orders, help users find or customize the right product within your offerings, etc.

But without a particular problem in mind, visitors to your website may shut down the bot before they understand its value. To someone who’s just browsing, an empty text box and the question ‘What can I help you with today?’ offers too many choices; it’s easier to X out and not ask anything.

The Behavioral Science Insight

When people are faced with too many options or presented with a complex or ambiguous ask, they may disengage altogether. ‘I’ll figure out what to ask you later,’ they say, and never return. This choice overload effect is especially true when the options are quite similar and when the decision-maker isn’t that invested.

When people are trying out new technologies for the first time, they may not have strong preferences, or their strong preference may be to stay just as they are (we call this status quo bias). To help people overcome this tendency for inaction, do this:

1) Show/tell them exactly how to use your customer service bot

2) Link use to existing behaviors to create habit stacks

Consider two famous assistants: Amazon’s Alexa & Microsoft’s Cortana. Both began with very different applications in mind than we have for them today. As a smart speaker, Alexa’s function was clear: play music. People understood this and bought in. To this day, Amazon smart speakers lead the industry.

Cortana, in contrast, tried to do it all. Software can succeed or fail for all kinds of reasons, but from a behavioral standpoint, the lack of a strong mental model for use may well have been Cortana’s downfall.

What can you learn from this? Alexa did two things really well: she gave clearly defined cues (‘You can ask me to play music’), and she tied those cues to existing behavioral needs. She built a strong mental model and had a way to reinforce it. Both factors encouraged habit formation.

How to Do It

Don’t have your chatbot solve everything. Instead, start by limiting its scope. Maybe your virtual agent leads with ‘I solve order returns’ rather than ‘I’m the omnipotent god of customer service’.

In turn, that makes it easier to surface the virtual agent at the right time. Instead of popping up as soon as a website visitor opens your page, limiting scope means triggering the bot’s appearance in response to metrics that signal a customer service need, such as searching for returns or landing on the return FAQ.
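One way to picture this kind of scoped trigger is a small rule that surfaces a returns-only bot when a visitor shows a returns-related signal. This is a minimal sketch: the `VisitorEvent` shape, the search terms, and the page paths are hypothetical and would come from your own site and analytics.

```python
from dataclasses import dataclass

# Hypothetical signals of a returns-related need.
RETURN_SEARCH_TERMS = ("return", "refund", "exchange")
RETURN_PAGES = ("/help/returns", "/faq/returns")


@dataclass
class VisitorEvent:
    page_path: str
    search_query: str = ""


def should_show_returns_bot(event: VisitorEvent) -> bool:
    """Show the bot only when the visitor signals a returns need."""
    query = event.search_query.lower()
    searched_for_returns = any(term in query for term in RETURN_SEARCH_TERMS)
    # str.startswith accepts a tuple of prefixes.
    on_returns_page = event.page_path.lower().startswith(RETURN_PAGES)
    return searched_for_returns or on_returns_page


print(should_show_returns_bot(VisitorEvent("/products/shoes", "how do I return shoes")))
print(should_show_returns_bot(VisitorEvent("/")))
```

The first call returns True because the search mentions a return; the second returns False, so a visitor who is just browsing the home page never sees the pop-up.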

Teach Users to Write Better Prompts

The Alexa example represents a very specific use case to increase adoption. But to really benefit from the new generation of virtual agents, users may need to craft specific, longer, and context-driven requests. However, decades of Google searches have trained people to give 2-3 keywords when they’re searching for something online. Users won’t get it right immediately. Here’s a behavioral primer on how to teach people a new, creative behavior.

The Behavioral Science Insight

Someone who contacts customer service is confused at best and frustrated at worst. Given this state of mind, this is not the time to expect them to learn a new skill.

They’ll quickly lose patience with building and iterating on AI prompts. Any frustration is likely to cause them to escalate their complaint. How can you help them prompt more creatively?

Provide clear constraints. One study of how constraints impact creativity asked people to write rhymes to say things like ‘feel better.’ Half of participants were asked to also include particular nouns. Which group wrote more creative rhymes? You decide:

No constraints group:

I will write you a letter

to help you feel better

Additional constraints group:

I hope that you soon feel better

than a mouse with 1,000 pounds of cheddar

Participants who had been given additional constraints wrote more creative rhymes even after the constraints were lifted – meaning that simply practicing with constraints increases creativity.

How to Do It

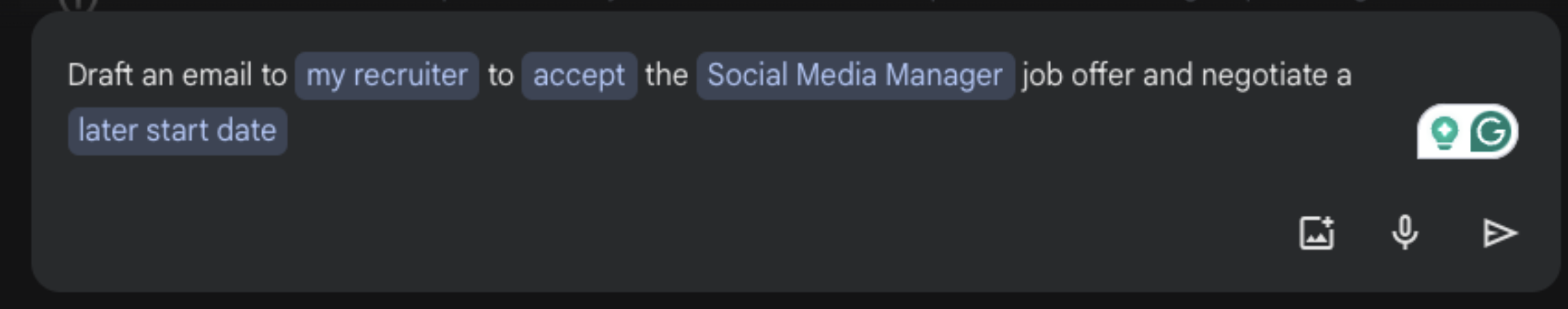

Show users exactly what to type. Google’s Gemini recently introduced a Mad Libs-esque template prompt that asks people to just adjust a few words. This helps build a new mental model (‘long phrases with connector words’) and seeds their first submissions, leading to more helpful initial responses. By immediately getting people the answer they need, Google reduces time to value and increases user satisfaction.

Furthermore, correct potential user errors with very intentional framing. Google’s ‘Did you mean…?’ response to searches flagged as misspellings is a good example of this. Alexa’s ‘I’m sorry, I’m having trouble understanding’, on the other hand, exemplifies how not to do this. It suggests that the tech is flawed without offering a positive example of how to fix this.
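The fill-in-the-blank prompt idea can be sketched as a template with a few editable slots. This is not Gemini’s actual template; the wording, slot names, and default values below are invented for illustration.

```python
# Hypothetical template: a full sentence with connector words and three editable slots.
PROMPT_TEMPLATE = (
    "I need help with {issue} for my {product}. "
    "I already tried {attempted_fix}."
)

# Example values shown as placeholder text the user can overwrite.
DEFAULT_SLOTS = {
    "issue": "a late delivery",
    "product": "running shoes",
    "attempted_fix": "checking the tracking link",
}


def build_prompt(**user_slots: str) -> str:
    """Fill the template, keeping the example value for any slot left blank."""
    filled = {key: value for key, value in user_slots.items() if value.strip()}
    slots = {**DEFAULT_SLOTS, **filled}
    return PROMPT_TEMPLATE.format(**slots)


print(build_prompt(issue="a damaged item"))
# prints: I need help with a damaged item for my running shoes. I already tried checking the tracking link.
```

Because every slot has a sensible default, the user can send a complete, context-rich request after changing only a word or two, which models the longer phrasing the virtual agent needs.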

Humanize It

All this talk about tone leads us to another behavioral change you can make to a customer service bot: personality. Users will interact with a chatbot differently depending on how it’s designed, and these humanizing elements can have a big impact on how convincing or helpful people find the bot.

The Behavioral Science Insight

In retail and service contexts, people want to make good choices. They want to know what’s good and bad about their options so they can pick what’s best for them. Researchers hypothesized (and maybe you do too) that offering balanced information (instead of only positive information) would make people trust a recommendation from a chatbot more. But does the tone of that information affect how convincing it is? It turns out, yes.

Researchers designed a chatbot whose tone was either neutral, warm, or competent. The neutral assistant got right down to business, saying things like ‘You can use me to search for your desired product on this website.’ The warm chatbot said things like ‘I’m very happy to welcome you. Certainly, I would like to help you to find a suitable product for you on this website’ while the competent chatbot emphasized its expertise: ‘Due to the latest technology and years of experience, I’m able to quickly and efficiently find the best product for you on this website.’

Two interesting patterns showed up:

- People found the balanced information most convincing when it was presented by a chatbot designed to be competent.

- People found the chatbot most convincing when it was designed to be warm and presented balanced information.

How to Do It

Ask yourself what your user hopes to accomplish with your chatbot and how this interacts with your brand identity. Is it fun and aimed at forming a long-term relationship? Duolingo’s owl keeps things playful, even while nagging users to not lose their streak.

Are you a big bank addressing one-off issues? Stick to competence and present balanced information to help build trust.

Final Thought: Don’t Crack a Nut With a Sledgehammer

Now that you’re aware of these behavioral science strategies for improving your AI-powered customer service virtual agent, it’s also worth asking yourself: is the problem your bot solves better addressed upstream?

As a designer, PM, or engineer, you’re susceptible to the curse of knowledge: you know how your product works and what it’s supposed to do. This can make it hard to see your product through a new user’s eyes and anticipate the issues they’ll have.

To avoid over-solving a problem, don’t forget to look at the questions people are asking customer service. For example:

- Are they always trying to track orders? You may not need generative AI to solve that. Instead, redesign their order confirmation email to more clearly spotlight the tracking link.

- If they’re always searching to reset their password, add a Forgot Password link to the sign-in page.

The best way to find those root causes? Do a behavioral diagnosis. We can help with this. Interested in learning more about our scientific approach to design and grow solutions? Let’s talk.

These are just a few of the ways you can change behavior meaningfully through small interventions. Want to help your health, finance, or tech company unlock big impact? Get in touch to learn more about our consulting services. Or learn more behavioral science by joining one of our bootcamps.