by Evelyn Gosnell | June 7, 2022 | attraction, Behavioral Economics, Experiments, Online Dating

When men say they prefer women without makeup, do they really mean it? A few years ago, my male friends suggested that women should wear less makeup because they’d look more attractive with a ‘natural look.’ My male friends weren’t alone: all over the internet, men claimed that women looked better without makeup, and even accused women of catfishing when they wore it. But this preference was inconsistent with what I’d actually experienced. I wanted to investigate.

As product managers and market researchers, we often turn to surveys to get answers. We ask about user goals, customer preferences, and the barriers customers face in adopting a behavior. At an apparel company where I used to work, the company mantra was ‘Speak with data.’ Whenever I suggested a new idea to my boss, he would cut me off with, ‘Are you sure customers want this? Go run a survey.’ Most companies are survey-happy, using polls to gauge both interest in new products and the price customers would pay for them.

Where Surveys Fail

The problem? Asking people what they think is unreliable, because people often don’t know. On top of that? They won’t even admit when they don’t know. One study suggests people would rather dig in their heels on a wrong answer than admit they don’t have a preference (Nisbett and Wilson 1977). This conundrum applies to everyone from the users of an exercise app to customers trying a new chip flavor. I was pretty sure it also applied to the guys who advised women to lay off the lipstick.

Instead of just asking, I designed an experiment that would measure behavior.

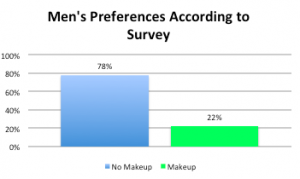

78% of the men I surveyed said they preferred women with the no-makeup look, while just 22% said they preferred makeup. In a company context, product managers would assume that this revealed a clear preference. Done deal. They’d make product changes accordingly, reorganize their team’s priorities and budget, and release something tailor-made for their target audience.

But let’s slow down for a second. This type of survey assumes that people’s preferences are fixed, and that they are able to accurately predict them. Behavioral science shows us that this is definitely not the case. The best way to understand behavior is to test it, not to ask about it.

Experimenting with a Dating App

Pushing my discomfort aside, I made two profiles for myself on the online dating website OKCupid. For one profile photo, I wore makeup, and for the other, I wore none. Everything else—the demographics, the outfit, the bio—was exactly the same. I didn’t respond to any messages on either account, and I kept each account active for the same amount of time.

Two profile images of Evelyn Gosnell with makeup (right) and without makeup (left). Photo credit Paula Majid Photography.

Drumroll for the results? The profile with makeup received messages from 34% more men. While the men in my survey said they preferred women without makeup, the men on the site were actually much more likely to contact a woman who was wearing makeup than one who wasn’t. The stated preferences pointed in the exact opposite direction from the behavior.

(Side note: 10 of the men messaged both profiles, and 7 of those 10 sent the same message to both. Not that I blame them. Christian Rudder, a co-founder of OKCupid, actually promotes this strategy in his book, Dataclysm. I wrote about his other findings before, but in case you missed it, find it here.)

Why does it matter? It reveals that men may not actually know what they’re talking about. New studies suggest that men don’t like it when women wear makeup, but still want them to appear naturally flawless, hence the no-makeup makeup look. It also confronts us with our troubling tendency toward appearance bias, a preference for attractiveness that skews our perception of the world, with serious consequences.

For our purposes, this also exemplifies why you should run experiments instead of surveys! Surveys have their place, like in focus groups or when providing anecdotes, but you’ll get better insights when you test true behavior instead of stated preferences. When these results inform a major business decision—designing a new product, reorienting an advertising campaign, reallocating a budget—the right data matters.

How can you start running experiments?

- Keep it simple. Running an experiment doesn’t need to be a massive endeavor. Start with your newsletter. Even splitting your email list and sending different content to each group is an experiment. Make sure you split the list randomly, change as few variables as possible, and measure both groups over the same timeframe. A good experiment is an exact one.

- Have a control group. A control group, ideally selected through randomization, lets you evaluate the response to an intervention. Control and experimental treatments should be isolated as much as possible so you can identify what worked (and how and when). That isolation can be difficult to achieve in an online setting, but it’s key to a successful evaluation.

- Set up a feedback mechanism. A feedback mechanism lets you observe how customers respond to different treatments, through behavioral and perceptual metrics. Behavioral metrics measure actions, ideally actual purchases. Perceptual metrics capture how customers think they’ll respond to your actions, through speculative feedback like surveys, focus groups, and other market research.

- Understand the basics. This is a great HBR article that summarizes the how-tos of running experiments in companies. It focuses on the two cornerstones of running experiments: setting up a control group and identifying feedback mechanisms. It also offers 7 actionable rules for getting the most out of your design.

- Experiment with everything. Leading companies increasingly experiment with everything, and they report promising results on consumer behavior, fascinating insights into human psychology, and amazing returns on their investment in behavioral research. Bing, for example, increased revenue per search by 10 to 25 percent per year using an experimentation-forward approach.

- Explore the data. Once results come in, you can break them down by demographic to assess where the experiment worked and who it worked for. Look at the differences in time scale, sample size, and response rate. Every data point could be a lead.

- Learn the foundations. Looking for a more in-depth guide to implementing behavioral design, including the best ways to design experiments to improve your research process and product? Check out our Behavioral Design online bootcamp.
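
If you want to try the newsletter experiment from the first tip, here is a minimal Python sketch of the two mechanical steps: randomly splitting a list into a control group and a treatment group, then comparing how each group behaved. Everything in it is an assumption for illustration: the function names `split_randomly` and `compare_rates`, the email addresses, and the click counts are all made up, and the two-proportion z-test is just one common way to check whether a difference is bigger than chance. It is not the method used in the dating experiment above.

```python
import random
from math import erf, sqrt


def split_randomly(emails, seed=42):
    """Shuffle a copy of the list and cut it in half: (control, treatment)."""
    shuffled = list(emails)
    # A fixed seed makes the split reproducible; it is still a random assignment.
    random.Random(seed).shuffle(shuffled)
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]


def compare_rates(control_hits, control_n, treatment_hits, treatment_n):
    """Return (relative lift, two-sided p-value) using a two-proportion z-test.

    Assumes both groups are non-empty and the control rate is above zero.
    """
    p_control = control_hits / control_n
    p_treatment = treatment_hits / treatment_n
    lift = (p_treatment - p_control) / p_control

    # Pooled rate under the assumption that the treatment made no difference.
    pooled = (control_hits + treatment_hits) / (control_n + treatment_n)
    std_err = sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / treatment_n))
    z = (p_treatment - p_control) / std_err

    # Standard normal CDF via the error function, then a two-sided p-value.
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return lift, p_value


# Hypothetical mailing list and hypothetical click counts, for illustration only.
emails = [f"user{i}@example.com" for i in range(1000)]
control, treatment = split_randomly(emails)

lift, p_value = compare_rates(
    control_hits=50, control_n=len(control),      # clicks on the usual newsletter
    treatment_hits=75, treatment_n=len(treatment),  # clicks on the new version
)
print(f"Relative lift: {lift:.0%}, p-value: {p_value:.3f}")
```

The behavioral metric here is clicks, but the same comparison works for purchases or replies. The important part is the random split: it is what lets you attribute a difference between the groups to the change you made rather than to who happened to be in each group.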


This is a modified post that originally appeared on behaviorly.com on July 16, 2015.